Abstract

The design and performance of the inner detector trigger for the high level trigger of the ATLAS experiment at the Large Hadron Collider during the 2016–2018 data taking period is discussed. In 2016, 2017, and 2018 the ATLAS detector recorded \(35.6~\mathrm {fb}^{-1}\), \(46.9~\mathrm {fb}^{-1}\), and \(60.6~\mathrm {fb}^{-1}\) respectively of proton–proton collision data at a centre-of-mass energy of 13 TeV. In order to deal with the very high interaction multiplicities per bunch crossing expected with the 13 TeV collisions the inner detector trigger was redesigned during the long shutdown of the Large Hadron Collider from 2013 until 2015. An overview of these developments is provided and the performance of the tracking in the trigger for the muon, electron, tau and b-jet signatures is discussed. The high performance of the inner detector trigger with these extreme interaction multiplicities demonstrates how the inner detector tracking continues to lie at the heart of the trigger performance and is essential in enabling the ATLAS physics programme.

1 Introduction

The trigger system is an essential component of any collider experiment – especially so for a hadron collider – as it is responsible for deciding whether or not to keep an event from a given bunch-crossing interaction for later study. During Run 1 (2009 to early 2013) of the Large Hadron Collider (LHC) [1], the ATLAS detector [2] trigger system [3,4,5,6,7,8,9,10] operated efficiently at instantaneous luminosities of up to \(8\times 10^{33}\) cm\(^{-2}\)s\(^{-1}\) and primarily at centre-of-mass energies, \(\sqrt{s}\), of 7 \(\text {TeV}\) and 8 \(\text {TeV}\). In Run 2 (2015–18) the increased centre-of-mass energy of 13 \(\text {TeV}\), higher luminosity and increased number of proton–proton interactions per bunch-crossing (pile-up) required a significant upgrade to the trigger system. This was necessary to avoid the required processing time exceeding that available when running with the trigger thresholds that are needed to satisfy the ATLAS physics programme. For this reason, the first long shutdown between LHC Run 1 and Run 2 was used to improve the trigger system with almost no component left untouched.

Final states involving electrons, muons, taus, and b-jets [8, 11, 12, 13, 14, 15] provide important signatures for many precision measurements of the Standard Model and searches for new physics. The ability of the ATLAS trigger to process information from the inner detector (ID) [16] to reconstruct particle trajectories is an essential requirement for the efficient triggering of these objects at manageable rates. Typical rate rejection factors possible using tracking information from the ID trigger range very approximately from 50 to 100 for the electron and \(b\text {-jet}\) triggers, and from 3 to 10 for the muon and tau triggers. Without this rate rejection from the ID trigger it would not be possible to achieve the goals of the ATLAS physics programme. The ID trigger must therefore be able to reconstruct tracks with high efficiency across the entire range of possible physics signatures, under all expected circumstances. This challenge is exacerbated by the extremely high track and hit occupancies in the ID that arise for the large pile-up multiplicity present during LHC running at the highest operation intensities.

The performance of the ATLAS trigger system for the lower luminosity running in 2015 has been reported previously [4], and included a brief description of the ID trigger design. In this paper the full details of the ID trigger design and implementation are presented, followed by a discussion of the execution time of the different components of the ID trigger and the full performance in terms of efficiency and resolution from the 2016–2018 running period.

2 The ATLAS detector, trigger and data acquisition systems

The ATLAS detector [2] at the LHC is a multi-purpose particle detector with a forward–backward symmetric cylindrical geometry and a near 4\(\pi \) coverage in solid angle around the collision point (see Footnote 1). It consists of an inner tracking detector surrounded by a thin superconducting solenoid, providing a 2 T axial magnetic field, electromagnetic and hadronic calorimeters, and a muon spectrometer incorporating large superconducting toroidal magnets. Owing to the cylindrical geometry, subdetector components generally consist of a barrel region with central pseudorapidities close to zero, and two endcap components, one each in the forward regions with large absolute pseudorapidity.

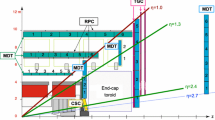

The inner detector, shown schematically in Fig. 1, allows the precise reconstruction of charged-particle trajectories in the pseudorapidity range \(|\eta | < 2.5\). It consists of three subsystems: the high granularity silicon pixel detector, the silicon microstrip tracker, and the transition radiation tracker. The pixel detector consists of three barrel layers, and three layers in each endcap, and an insertable B-layer (IBL) [17, 18] closest to the interaction point, installed before Run 2. The IBL spans the entire pseudorapidity range of the inner detector. The pixel detector typically provides four measurements per track. The pixel detector is surrounded by the silicon microstrip tracker (SCT) [19, 20], which consists of double layer silicon strip detectors, four in the barrel, and nine in each endcap. These provide axial hits, or azimuthal hits in the endcaps, and small-angle stereo hits used to provide improved spatial resolution in the longitudinal direction along the modules. The SCT typically provides eight measurements per track. The silicon detectors are complemented by the transition radiation tracker (TRT) [21,22,23], consisting of multiple layers of straw cylindrical drift tubes filled with either a Xe–\(\hbox {CO}_2\)–\(\hbox {O}_2\), or Ar–\(\hbox {CO}_2\)–\(\hbox {O}_2\) mixture, and which enables radially extended track reconstruction up to \(|\eta | = 2.0\).

The calorimeter system covers the pseudorapidity range \(|\eta | < 4.9\). Within the region \(|\eta |<3.2\), electromagnetic calorimetry is provided by barrel and endcap high granularity lead/liquid-argon (LAr) calorimeters, with an additional thin LAr presampler covering \(|\eta | < 1.8\) to correct for energy loss in material inside the calorimeter radius. Hadronic calorimetry is provided by the steel/scintillator-tile calorimeter, and two copper/LAr hadronic endcap calorimeters. The more forward regions have copper/LAr and tungsten/LAr calorimeter modules optimised for electromagnetic and hadronic measurements respectively.

The muon spectrometer (MS) [24] is composed of separate trigger and high-precision tracking chambers measuring the deflection of muons in a magnetic field generated by superconducting air-core toroids. The field integral of the toroids ranges between 2.0 Tm and 6.0 Tm across most of the detector. Precision chambers cover the region \(|\eta | < 2.7\) and are complemented by cathode-strip chambers in the forward region. The muon trigger system [3, 4, 25] covers the range \(|\eta | < 2.4\) with resistive-plate chambers in the barrel, and thin-gap chambers in the endcap regions.

Interesting events are selected to be recorded by the first level trigger system (Level 1, L1) [3, 26] implemented in custom hardware, followed by selections made by algorithms implemented in software in the high level trigger (HLT) [3, 4]. The L1 trigger processes events at the 40 MHz bunch crossing rate. Events are accepted at a rate below 100 kHz, which the HLT further reduces in order to record events to permanent storage at approximately 1.2 kHz [27].
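
The rate reduction implied by these numbers can be made explicit with a little arithmetic. The sketch below uses only the three rates quoted above; the function name `rejection_factor` is illustrative, and because the L1 accept rate is quoted as an upper bound, the L1 factor is a lower bound and the HLT factor an upper bound.

```python
# Rates quoted in the text, in Hz.
BUNCH_CROSSING_RATE_HZ = 40e6   # input rate to the L1 trigger
L1_ACCEPT_RATE_HZ = 100e3       # upper bound on the L1 accept rate
HLT_OUTPUT_RATE_HZ = 1.2e3      # approximate rate written to permanent storage


def rejection_factor(rate_in_hz, rate_out_hz):
    """Return the number of input events per accepted event."""
    if rate_out_hz <= 0:
        raise ValueError("output rate must be positive")
    return rate_in_hz / rate_out_hz


# L1 accepts at most 100 kHz, so this is the minimum L1 rejection.
l1_rejection = rejection_factor(BUNCH_CROSSING_RATE_HZ, L1_ACCEPT_RATE_HZ)

# Taking the full 100 kHz as HLT input gives the maximum HLT rejection.
hlt_rejection = rejection_factor(L1_ACCEPT_RATE_HZ, HLT_OUTPUT_RATE_HZ)

# Combined reduction from bunch crossings to recorded events.
total_rejection = l1_rejection * hlt_rejection

print(f"L1 rejection  >= {l1_rejection:.0f}")
print(f"HLT rejection <= {hlt_rejection:.0f}")
print(f"overall       ~  {total_rejection:.0f}")
```

With these inputs, L1 keeps at most one bunch crossing in 400, and the HLT keeps roughly one L1-accepted event in 80.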

The ATLAS trigger and data acquisition (TDAQ) system used during Run 1 [3, 28] consisted of a multi-level trigger system with a hardware, pipelined Level 1 trigger and two large farms of commodity CPUs, one for the Level 2 (L2) software trigger, and the second for the third level, event filter (EF) processing. In Run 1 the separate L2 and EF farms collectively constituted the HLT system. For the L1 trigger operation, a reduced granularity subsample of the data from the calorimeters and the muon spectrometer is read out and processed in a custom read-out path and sent to the off-detector hardware L1 processors. In parallel, the data from the entire detector are stored in custom on-detector pipelined read-out buffers (ROBs) [29] ready to be read out in the eventuality of an L1 trigger accept decision. Upon such a decision, the pipelines are stopped and the data for the corresponding bunch crossing are read out from the ROBs to the off-detector read-out subsystem, ready for distribution to the farm of the HLT processing nodes. Because of the extremely high data volume in the SCT and pixel detectors, these can be read out only following an L1 accept and therefore the first stage at which tracks could be reconstructed in the silicon layers during Run 1 was at Level 2. The coarse granularity muon spectrometer or calorimeter data read out for the L1 trigger are used to reconstruct objects of interest, such as potential track segments in the muon spectrometer from muon candidates, or high energy clusters reconstructed in the calorimeter. These objects are used to define regions of interest (RoI) in the detector which are worth reconstructing with the data read out at the full granularity. Using RoI typically reduces the amount of data to be transferred and processed in the HLT to between 2% and 6% of the total data volume [6].

For Run 2, the LHC increased the centre-of-mass energy in pp collisions from 8 to 13 \(\text {TeV}\), with a reduction of the nominal bunch spacing from 50 to 25 ns [30] and an increase in the beam intensity per bunch crossing. This increase led to a significant increase in the mean pile-up interaction multiplicity per bunch crossing, with correspondingly higher track and hit multiplicities.

The increase in beam energies meant that the trigger rates were on average a factor of 2–2.5 times larger for the same luminosity and with the same trigger criteria [4]. In order to prepare for the significantly higher expected rates in Run 2, several trigger upgrades were implemented during the 2013–2015 long shutdown, which are summarised here.

For Run 2, the separate L2 and EF farms were combined into a single, homogeneous HLT farm [31]. During Run 1, Level 2 requested only partial event data to be sent over the network, while the event filter operated on the full event information assembled by separate farm nodes dedicated to event building [32]. For Run 2, merging the separate farms into a single homogeneous farm allowed for better resource sharing and an overall simplification of both the hardware and software. To achieve higher read-out and output rates, the read-out subsystem, data collection network, and data storage system were upgraded [33]. The on-detector front-end electronics and detector-specific read-out systems were not changed for Run 2 operation in any significant way.

During Run 2, the typical mean processing time for the complete HLT processing per event was between 0.4 and 0.8 s for the higher pile-up at the start of a fill, decreasing to around 200 ms for lower pile-up.

3 The inner detector trigger

The HLT is the first level where information from the silicon detectors in the ID is available in the trigger. The track reconstruction in the HLT is then performed either within an RoI identified at L1 or for the full detector. Throughout Run 1 and Run 2, the HLT ran progressively more complex algorithms to reduce the rate of events to be processed successively throughout the HLT. Starting from the initial input rate determined by the L1 trigger accept rate, the fastest algorithms were executed first to reduce the rate at which the more complicated algorithms needed to run. To achieve this, the reconstruction in the HLT generally consists of a two-stage approach with a fast track reconstruction stage to reject the less interesting events, followed by a slower, precision reconstruction stage – the precision tracking – for those events remaining after the fast reconstruction. For both Run 1 and Run 2, each of the initial RoI used in the HLT is wedge shaped, extending in z along the full 450 mm interaction region at the beam line and opening out in \(\eta \) and \(\phi \). This large extent in z is required since the z position of the interaction is not known before the tracking itself has been executed.

During the 2013–15 LHC long shutdown, many changes were implemented in the ID trigger to better handle the changing run conditions expected in Run 2. To motivate these changes, Sect. 3.1 briefly summarises the tracking strategy used during Run 1 and is followed in Sect. 3.2 by an explanation of the developments implemented for Run 2 and why they were needed. The subsequent sections describe in detail the structure and algorithms used in the ID trigger during Run 2.

3.1 The inner detector trigger for Run 1

For Run 1 the ID tracking in the trigger [3, 34, 35] ran the fast reconstruction stage on the dedicated L2 CPU farm and the precision tracking stage on the dedicated Event Filter CPU farm. This division meant that the information passed between the different trigger levels was essentially limited to the RoI within which the reconstruction should run, necessitating that all stages in the track reconstruction, including any data preparation, be performed independently in each of the two trigger levels.

At L2 the tracking consisted of several alternative custom pattern recognition algorithms and tracking algorithms, which were all preceded by a fast data preparation stage to read out any data from the pixel detector and SCT, and prepare the space-points. To optimise the tracking efficiency, the best-performing pattern recognition algorithm was chosen independently for each signature. Following the pattern recognition, track candidates were then identified using a fast Kalman filter track fit [36] and the tracks could be extended into the TRT.

In the event filter, the precision tracking stage (referred to as EFID during Run 1) ran offline tracking algorithms adapted for use in the trigger. Starting with the offline data preparation, the tracking continued with the offline pattern recognition, followed by the ambiguity solver [37] which resolves any ambiguity with respect to duplicated hits or hits falsely attributed to tracks, ranking the tracks and rejecting those where the track quality is deemed insufficient. A more detailed exposition of the ID trigger used during Run 1 can be found elsewhere [35].

Fig. 3. The processing time for the Run 1 precision tracking pattern recognition stage (a), and the processing time of the ambiguity solver stage from the Run 1 precision tracking (b). In both cases the processing time is shown before and after the improvements to the algorithms implemented during the 2013–2015 long shutdown

Fig. 4. The reduction in the latency for the Run 2 ID trigger strategy with respect to the Run 1 strategy: (a) the total time for the isolated 24 \(\text {GeV}\) electron trigger with the Run 1 and Run 2 strategies; (b) the time taken by the ambiguity solver in the Run 1 and Run 2 strategies. In all cases, the improved offline code implemented late in 2014 has been used in the precision tracking stage

Fig. 5. Schematic illustrating the single-stage tracking for a single RoI. Here single-stage refers to the single processing of a specific RoI, rather than the separate steps in the track processing itself. Selection can be applied in hypothesis algorithms (hypos) which reduce the rate for processing the later steps

3.2 Evolution of the inner detector trigger strategy from Run 1 to Run 2

The general purpose in running the event filter during Run 1 was to confirm the objects reconstructed and selected by Level 2, and to improve on the precision of their reconstruction, so allowing for the application of tighter selection to further reduce the rate. For the Run 1 trigger, the events processed by the event filter were necessarily required to have passed the Level 2 selection and consequently, running any algorithm in the event filter that was more efficient than its Level 2 counterpart was in some sense unnecessary, as any event that had not passed the Level 2 stage would have already been discarded. However, the longer processing times available for the offline algorithms executed in the Run 1 event filter afforded by the lower processing rate meant that the EF tracking was indeed more efficient than that in Level 2, but that this additional efficiency could not be exploited as the events would have already been rejected by the Level 2 trigger.

As mentioned in Sect. 2, for Run 2, the previously separate L2 and EF processing stages were merged to instead run as part of the same process on individual HLT nodes within the HLT farm. This merging allows information from algorithms that previously ran in the L2 reconstruction stage to more easily be used later in the processing chain, thus removing any need to run separate, duplicated data preparation and pattern recognition stages for the tracking.

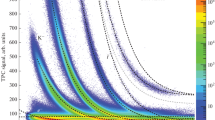

The performance of the offline tracking algorithms as used in the precision tracking for the EF trigger in Run 1 was studied in detail under Run 2 conditions to determine which aspects of the offline tracking could be retained for the ID trigger in Run 2. Figure 2 shows the time taken by the different stages of the EF tracking from the Run 1 ATLAS muon trigger as a function of the mean pile-up interaction multiplicity, \(\langle \mu \rangle \), expected during Run 2 and beyond, using simulated \(Z\rightarrow \mu ^+\mu ^-\) events with a 14 \(\text {TeV}\) centre-of-mass energy (see Footnote 2). Under these Run 2 conditions, the pattern recognition stage of the offline tracking can be seen to constitute more than 60% of the total time of the Run 1 precision tracking with a pile-up of 70 interactions per bunch crossing and does not scale linearly with the pile-up multiplicity.

In addition to the constraints on the trigger system in Run 2, there are strong constraints on the time taken to perform the offline reconstruction. For this reason an extensive programme of software optimisation [38, 39] was undertaken between Run 1 and Run 2. This provided tangible benefits for the ID trigger for those algorithms shared with the offline reconstruction. Improvements in the execution time resulted from improvements to the computing infrastructure – namely the switch to running on a 64-bit, rather than a 32-bit kernel, and using a newer compiler: GCC 4.8 rather than 4.3 – and the replacement of the CLHEP [40] linear algebra library, by the Eigen library [41].

These consequently led to a reduction in the latency of both the pattern recognition and track fitting stages for offline tracking used in the Run 1 precision tracking. The effect on the processing times following these improvements for their modified use in the trigger can be seen in Fig. 3. This shows a clear improvement in the Run 1 pattern recognition strategy and ambiguity solver processing times by factors of approximately three and ten respectively, when running on simulated \(Z\rightarrow e^+e^-\) events.

Despite this significant improvement in processing time, the offline pattern recognition was still too time consuming to be used in the trigger with the increase in the pile-up multiplicity expected for Run 2. As a consequence, the chosen strategy for the ID trigger for Run 2 was to reuse the output from the pattern recognition for the fast tracking by using the identified tracks and hits to seed the ambiguity solver directly, so completely removing the need to run the offline pattern recognition stage in the trigger.

Fig. 6. Schematic illustrating (a) the track seed formation in radial bins and azimuthal sectors, where the midpoint sector for a triplet is the sector containing the midpoint, and where the inner and outermost hits are allowed to originate in the same sector, or the adjacent sectors, and (b) track seed formation in the r–z plane, where the extrapolated z position of the triplet at the beamline can be restricted to within a specific z region

The result of the improvement can be seen in Fig. 4 which shows, for the same simulated \(Z\rightarrow e^+e^-\) events, the total time required for the full 24 \(\text {GeV}\) isolated electron trigger including the calorimeter reconstruction – in this case comparing the Run 2 strategy described above, with that from Run 1, but with the upgraded offline tracking algorithms.

Even with the complete reconstruction of the signature, including the time to run the calorimeter and the space-point reconstruction, the Run 2 strategy is still approximately three times faster than the Run 1 strategy.

Also illustrated in Fig. 4 is the execution time for the improved ambiguity solver code with both the Run 1 and Run 2 strategies. The Run 2 strategy is seen to be approximately three times faster than that used in Run 1. This is a consequence of the track selection and reduction having been already performed by the fast tracking so that the ambiguity solver is not required to perform as much computation as it would if running on the greater number of track candidates that would arise from the offline pattern recognition.

Schematic illustrating the multistage tracking. Here multistage refers to the multiple passes of the track processing over specific parts of the detector, perhaps with an updated, or otherwise modified RoI. As in the single-stage case, selection can be applied in hypothesis algorithms (hypos) between any of the tracking steps in the two stages

3.3 The inner detector trigger in Run 2

For Run 2, the division of the tracking into fast tracking and precision tracking stages was retained: the fast tracking stage consisting of a newly implemented, trigger-specific pattern recognition stage, based on a hybrid of those used at L2 during Run 1, and the precision tracking stage again relying heavily on offline tracking algorithms, but seeded with information from the fast tracking stage [4, 16]. As in Run 1, the inner detector data preparation reconstructs clusters, and space-points from the silicon clusters, using the information from the pixels and the SCT strips [42]. The clusters and space-points are used for the remainder of the track reconstruction. Since the fast and precision tracking steps both run as aspects of the same process on a single CPU node, they can share a single silicon data preparation step.

The generic Run 2 tracking strategy is shown schematically in Fig. 5. This illustrates how the tracking is split into separate steps, which can be separated by additional non-tracking-related algorithms to reconstruct additional features, and hypothesis algorithms to select objects which satisfy certain selection criteria. These are used to reduce the rate of RoI to be processed between the fast track finder and precision tracking steps.

3.3.1 Data preparation

The pixel and SCT data preparation consists of decoding hits in the silicon modules of the inner detector from a byte stream format, grouping these hits into clusters [42] – necessary because particles deposit charge in several adjacent silicon cells – and forming space-points from the clusters. These represent points in three-dimensional space which are used for the pattern recognition, the constituent clusters themselves being used for the actual track fitting.

The data preparation employs the RoI mechanism, described later in this section, which allows the trigger to request only the data from those silicon modules falling within an RoI, saving both processing time, and data transfer bandwidth [43]. Following the L1 accept the data for each detector are read out to the ROB system, but to reduce the bandwidth only those ROBs containing data corresponding to an RoI need be interrogated for read-out to the HLT.

The computational complexity of the decoding algorithms for both the pixel and SCT detector subsystems is linear with respect to the number of data words, containing the encoded detector data from the subsystem. The decoders iterate over ROB data fragments, and over the data words within each fragment. While looping through an individual fragment, the algorithm maintains information regarding which module it is decoding, assigning raw data objects (RDOs) – in this case, decoded hits – to an appropriate in-memory container. Error information can also be encoded in the byte stream, but this is a fringe case which is not discussed here. Once the data words have been decoded and RDOs created and mapped to their appropriate modules, the clustering algorithm takes over, grouping adjacent hits within a module into clusters, referred to as reconstruction input objects. The clustering algorithms for the pixel and SCT detectors are similar in principle, but differ significantly in complexity. Since it operates in only one dimension, the SCT clustering algorithm complexity is linear with respect to the number of hits per module. It consists of a single loop over the SCT strips, accumulating those which are adjacent and active into clusters. The pixel clustering algorithm operates in two dimensions, and so with quadratic complexity, consisting of a double loop over each module, generating clusters for each hit and merging clusters with shared hits where necessary.
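
The two clustering approaches can be illustrated with a minimal sketch. This is not the ATLAS implementation: the function names `cluster_strips` and `cluster_pixels`, the input formats (strip indices and `(row, column)` pairs for a single module), and the choice to treat diagonally touching pixels as adjacent are all assumptions made for illustration.

```python
def cluster_strips(active_strips):
    """Group adjacent active strip indices into (first, last) clusters.

    After sorting, a single pass over the strips is enough, because
    adjacency only has to be checked against the previous strip.
    """
    clusters = []
    for strip in sorted(set(active_strips)):
        if clusters and strip == clusters[-1][1] + 1:
            # Extend the current cluster by one strip.
            clusters[-1] = (clusters[-1][0], strip)
        else:
            # Gap found: start a new cluster.
            clusters.append((strip, strip))
    return clusters


def cluster_pixels(hits):
    """Group touching (row, col) pixel hits into clusters.

    Every hit starts as its own cluster; a double loop over hit pairs
    merges the clusters of any two touching hits. Diagonal neighbours
    count as touching (an assumption of this sketch). The relabelling
    is deliberately naive to keep the example short.
    """
    hits = sorted(set(hits))
    label = list(range(len(hits)))
    for i in range(len(hits)):
        for j in range(i + 1, len(hits)):
            touching = (abs(hits[i][0] - hits[j][0]) <= 1
                        and abs(hits[i][1] - hits[j][1]) <= 1)
            if touching and label[i] != label[j]:
                old, new = label[j], label[i]
                label = [new if current == old else current
                         for current in label]
    clusters = {}
    for hit, cluster_id in zip(hits, label):
        clusters.setdefault(cluster_id, []).append(hit)
    return sorted(clusters.values())


strip_clusters = cluster_strips([5, 3, 4, 10, 11, 20])
pixel_clusters = cluster_pixels([(0, 0), (0, 1), (1, 1), (5, 5)])
```

The one-dimensional case needs only a comparison with the preceding strip, whereas the two-dimensional case has to compare pairs of hits, which is where the quadratic behaviour comes from.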

Finally, the pixel and SCT clusters are converted to space-points by means of a simple geometric transformation. The pixel clusters are rotated according to the module orientation and then offset by the module position in the global ATLAS coordinate system. The cluster positions in the ATLAS coordinate system are obtained using the module geometry determined by the offline alignment procedure [44]. In the SCT case, one of the modules in each back-to-back module pair is rotated at a 40 mrad stereo angle to provide longitudinal information along the module. Clusters from each side of the module are combined into pairs and two-dimensional local positions are derived from these combinations, after which the rotation and translation procedure is identical to that for the pixels. During the decoding the data are checked for errors to ensure increased robustness against detector failures, and the decoded data from each module are cached to remove the need to decode again, should they be requested multiple times.
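
As a hedged illustration of this geometric step, the sketch below combines an axial and a stereo strip measurement into a two-dimensional local position and then applies a rotation and translation into a global frame. The function names, the sign convention for the stereo rotation, and the example rotation matrix are assumptions of the sketch; only the 40 mrad stereo angle is taken from the text.

```python
import math

STEREO_ANGLE_RAD = 0.040  # 40 mrad stereo angle quoted in the text


def sct_local_position(u_axial, u_stereo, alpha=STEREO_ANGLE_RAD):
    """Combine the two sides of an SCT module into a local (x, y).

    Assumed convention: the axial side measures x directly, and the
    stereo side measures u' = x*cos(alpha) + y*sin(alpha).
    """
    x = u_axial
    y = (u_stereo - u_axial * math.cos(alpha)) / math.sin(alpha)
    return x, y


def local_to_global(local_xy, rotation, translation):
    """Rotate a local in-plane position and offset it by the module position.

    `rotation` is a 3x3 matrix (list of rows) giving the module
    orientation, and `translation` is the module position.
    """
    local = (local_xy[0], local_xy[1], 0.0)  # cluster lies in the module plane
    return tuple(
        sum(rotation[i][k] * local[k] for k in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical module: rotated by 90 degrees about z, offset in x and z.
ROTATION_90_DEG_ABOUT_Z = [[0.0, -1.0, 0.0],
                           [1.0, 0.0, 0.0],
                           [0.0, 0.0, 1.0]]
space_point = local_to_global((1.0, 2.0), ROTATION_90_DEG_ABOUT_Z,
                              (10.0, 0.0, 5.0))
```

Because the stereo angle is small, the division by `sin(alpha)` amplifies the strip measurement uncertainty by a factor of about 25, which is why the resolution along the module is much coarser than across it.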

The TRT data preparation is performed only for the precision tracking in the RoI for which the TRT track extension is included. As in the pixel and SCT case, the data preparation consists of the TRT byte stream decoding and creation of RDOs with the drift time information. The drift time measurements are then converted to the drift distances which are stored in the output TRT reconstruction objects.

3.3.2 Fast tracking

For Run 2, the fast track finder (FTF) [16] was developed to provide track candidates for use early in the trigger. These track candidates are then used to seed the precision tracking stage. Since the precision tracking provides the track candidates used for the final object selection in the trigger, the FTF design prioritises track finding efficiency over purity. During the FTF pattern recognition, a search for triplets of space-points (track seeds) is performed in bins of r and approximately 50 sectors in \(\phi \), where triplets may also be formed using hits in adjacent sectors at more positive, or more negative, \(\phi \), as illustrated schematically in Fig. 6. The innermost hits from the initial triplets must originate in the pixel detector. Triplet formation starts from a middle space-point and selects outer and inner space-points at larger and smaller radii, respectively. Also illustrated in Fig. 6, the inner and outer pairs of space-points must be compatible with the nominal interaction region along the beam line. In the case of tracking performed within an RoI, this region can be replaced by a restricted z-region of the RoI along the beam line if some information about the z position of the interaction is available – the RoI itself is used to pass external information about the expected track z interval to the track seeding algorithm. The triplet track parameters \(\phi _0\), transverse momentum \(p_{\text {T}}\), and transverse impact parameter at the point of closest approach to the beam line \(d_0\), are estimated using a conformal transformation [45], with the transformation centre placed at the middle space-point and applying cuts on \(d_0\) and \(p_{\text {T}}\). The track transverse momentum parameter from the track reconstruction is signed by the track charge. In practice, z information for the refinement of the RoI at the beam line is only available with high enough resolution after some version of the tracking itself has run.
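
One way such a triplet estimate could be made is sketched below. It is an illustration of the conformal-mapping idea rather than the FTF code. With the middle space-point taken as the origin, the map \(u = x/(x^2+y^2)\), \(v = y/(x^2+y^2)\) turns a circle through the origin with centre \((a, b)\) into the straight line \(2au + 2bv = 1\), so the two remaining space-points fix \(a\) and \(b\). The function name, the unsigned \(p_{\text {T}}\), and the use of the approximate relation \(p_{\text {T}}\,[\text {GeV}] \approx 0.3\,B\,[\text {T}]\,R\,[\text {m}]\) with the 2 T solenoid field are assumptions of the sketch.

```python
import math

SOLENOID_FIELD_T = 2.0  # axial field quoted earlier in the text


def triplet_parameters(inner, middle, outer, field_t=SOLENOID_FIELD_T):
    """Estimate (pT in GeV, |d0| in mm) from three transverse (x, y) points in mm.

    The beam line is assumed to sit at the origin of the global frame.
    """
    mx, my = middle
    mapped = []
    for x, y in (inner, outer):
        dx, dy = x - mx, y - my
        r2 = dx * dx + dy * dy
        mapped.append((dx / r2, dy / r2))      # conformal map about the middle point
    (u1, v1), (u2, v2) = mapped

    # Solve 2a*u + 2b*v = 1 for the two mapped points.
    det = u1 * v2 - u2 * v1
    if abs(det) < 1e-18:
        raise ValueError("collinear space-points: no finite curvature")
    a = 0.5 * (v2 - v1) / det
    b = 0.5 * (u1 - u2) / det

    radius_mm = math.hypot(a, b)
    pt_gev = 0.3e-3 * field_t * radius_mm      # 0.3 * B[T] * R[m]

    # Distance of closest approach of the circle to the beam line.
    centre_x, centre_y = mx + a, my + b
    d0_mm = abs(math.hypot(centre_x, centre_y) - radius_mm)
    return pt_gev, d0_mm


# Three points on a circle of radius 1000 mm that passes through the origin.
points = [(1000.0 - 1000.0 * math.cos(t), 1000.0 * math.sin(t))
          for t in (0.05, 0.1, 0.3)]
pt_estimate, d0_estimate = triplet_parameters(*points)
```

In a seeding loop, triplets whose estimated \(p_{\text {T}}\) falls below a threshold, or whose \(|d_0|\) is too large, would be discarded before any track following is attempted.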

Initial track candidates are then formed from the track seeds using a simple track finding algorithm which extends the track candidates into further layers to find additional hits, using the offline track-following algorithm [46], but with a modified configuration for faster execution. The track-following algorithm extends track candidates into adjacent layers to identify additional hits, refitting the track candidates as new hits are added. An algorithm to remove duplicate tracks which share track seeds is applied, retaining those tracks of higher quality. These preliminary tracks are then passed to a fast Kalman filter track fitter [47]. To reduce the processing time, hits from the TRT are not used in the FTF.

During the initial track finding, track candidates that have too large an absolute value of \(d_{0}\) are rejected in order to keep the contribution from fake tracks to a manageable level. For the muon signature, where the muon candidates are used to seed the B-physics signature which reconstructs hadronic resonances with a displaced secondary vertex, the maximum allowed \(|d_{0} |\) is 10 mm. For all other signatures, a maximum of 4 mm is used.

For some signatures, discussed later, a two-stage tracking strategy is used, running additional tracking stages in an updated RoI. In such a case, where the z range of the RoI along the beam line is restricted, the FTF triplet seed formation employs an additional selection where the individual space-point doublets used to construct the triplets are rejected if they do not point back approximately to the restricted region in z.
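
The doublet selection can be sketched as a straight-line extrapolation in the r–z plane. The function names and the optional tolerance parameter below are hypothetical, and the real selection may differ in detail; the sketch only shows how a pair of space-points can be tested against a restricted z interval at the beam line.

```python
def doublet_z_at_beamline(sp_inner, sp_outer):
    """Extrapolate the line through two (r, z) space-points to r = 0."""
    r1, z1 = sp_inner
    r2, z2 = sp_outer
    if r2 == r1:
        raise ValueError("space-points must lie at different radii")
    return z1 - r1 * (z2 - z1) / (r2 - r1)


def doublet_points_to_region(sp_inner, sp_outer, z_min, z_max,
                             tolerance_mm=0.0):
    """Return True if the doublet points back to [z_min, z_max] at the beam line.

    `tolerance_mm` widens the interval to reflect the word
    "approximately" in the text; its value is an assumption.
    """
    z0 = doublet_z_at_beamline(sp_inner, sp_outer)
    return z_min - tolerance_mm <= z0 <= z_max + tolerance_mm


# Doublet at r = 50 mm and r = 100 mm extrapolating to z = 10 mm.
inner_sp, outer_sp = (50.0, 20.0), (100.0, 30.0)
keep_in_narrow_roi = doublet_points_to_region(inner_sp, outer_sp, 5.0, 15.0)
keep_in_far_roi = doublet_points_to_region(inner_sp, outer_sp, -225.0, -100.0)
```

Rejecting doublets at this stage avoids forming triplets from them at all, which is where most of the combinatorial saving of a restricted z region would come from.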

Schematic illustrating the RoI from the single-stage and two-stage tau lepton trigger tracking, shown in plan view (x–z plane) along the transverse direction and in perspective view. The z-axis is along the beam line. The combined tracking volume of the 1st and 2nd stage RoI in the two-stage tracking approach is significantly smaller than the RoI in the single-stage tracking scheme

3.3.3 Precision tracking

The precision tracking stage takes the FTF tracks as input, and applies a version of the offline tracking algorithms [37, 48] configured to run online in the trigger [49, 50]. This includes a sophisticated algorithm for rejection of duplicate track candidates and clusters previously assigned to tracks but which lie too far from the track trajectory. In addition, the track candidates are extended into the TRT in an attempt to select TRT hits at larger radii to improve the track momentum resolution. The final ID track fit is then performed using a more precise global \(\chi ^2\) fitter algorithm [51] from code used for offline reconstruction. These stages are combined as aspects of the offline ambiguity solver algorithm discussed in Sect. 3.1.

Since the rate of event processing for the precision tracking is generally significantly lower than for the fast tracking, more detailed handling of the detector conditions is possible. This includes better compensation for detector effects such as inactive sensors or calibration corrections. The resulting precision tracks are therefore much closer in performance to the offline tracks than the fast tracks. By necessity, the precision tracking must generally make use of the detector conditions as they are known during the initial data taking for the run, and, unlike the offline tracking, cannot benefit from the improved knowledge of those conditions obtained during the subsequent offline processing. One exception where information must be updated during the data taking concerns knowledge of the beam line position. As the subsequent offline beamspot information is not available, a custom beam line vertex trigger is used, which runs the fast tracking, and then uses these tracks to run a fast vertex algorithm to establish the interaction region position in the transverse plane as a function of the z position itself. This is then used to update the beam line position used online whenever this position has moved by a predetermined amount [3, 27].

Since the precision tracking uses the tracks and clusters identified by the fast tracking, the precision tracking efficiency, by construction, cannot exceed that of the fast tracking. Overall, the primary purpose of the precision tracking is to perform a higher quality fit to improve the purity and quality of the trigger tracks relative to those tracks reconstructed offline.

3.3.4 Vertex reconstruction

During Run 2, two vertex algorithms were used online: a histogramming based algorithm, and the offline iterative vertex algorithm [52, 53]. Typically, trigger signatures use only the offline vertex algorithm. For the case of the \(b\text {-jet}\) trigger [10] however, both vertex algorithms were employed to maximise the vertex finding efficiency. The simple histogramming algorithm functions by first histogramming the \(z_0\) position for the point of closest approach to the beam line of each track. It then calculates the vertex z position using the mean of the bin centres weighted by the number of tracks in each bin for the group of adjacent bins within the 1 mm sliding window which contain the largest number of tracks. All tracks passing some basic quality selection are used and are weighted equally. The second algorithm, based on the offline vertex finder, with some minor modifications to run online, iteratively clusters tracks to determine the positions of vertex candidates, sorting the resulting vertex candidates by the sum of the squared transverse momenta of the tracks assigned to each vertex.
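
The histogramming step described above can be illustrated with a minimal sketch. This is not the ATLAS implementation: the function name, the bin width of 0.25 mm and the tie-breaking rule (the first window with the largest count wins) are assumptions made purely for illustration. Only the 1 mm window and the equal weighting of tracks are taken from the text.

```python
import math
from collections import Counter


def histogram_vertex_z(z0_values, bin_width=0.25, window=1.0):
    """Estimate the vertex z position [mm] from track z0 values.

    bin_width is an assumed value; window is the 1 mm sliding window.
    """
    if not z0_values:
        return None
    # Histogram the z0 values: bin index -> number of tracks (equal weights).
    counts = Counter(math.floor(z / bin_width) for z in z0_values)
    n_bins = max(1, round(window / bin_width))  # adjacent bins in one window

    # Slide the window over the occupied range and keep the fullest one.
    best_start, best_total = None, -1
    for start in range(min(counts), max(counts) + 1):
        total = sum(counts.get(b, 0) for b in range(start, start + n_bins))
        if total > best_total:
            best_start, best_total = start, total

    # Mean of the bin centres, weighted by the number of tracks in each bin.
    weighted = sum(
        counts.get(b, 0) * (b + 0.5) * bin_width
        for b in range(best_start, best_start + n_bins)
    )
    return weighted / best_total


z_vertex = histogram_vertex_z([10.1, 10.2, 10.3, 10.15, -50.0, 30.0])
```

Because only bin contents are used, the result is quantised by the bin centres, which is the price paid for the speed of this approach.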

In both cases, the vertex algorithm runs only on tracks that have been reconstructed in the relevant RoI of the track finding. For the leptonic triggers the offline based algorithm is usually executed using the precision tracks from the final stage of processing. For the \(b\text {-jet}\) trigger, both vertex algorithms are executed using tracks from a specific additional vertex tracking stage before the dedicated tracking in the \(b\text {-jet}\) RoI.

3.4 Multistage tracking

Although the fast and precision tracking run in distinct stages or steps – often separated by additional non-tracking algorithms and event rejection – running both algorithms sequentially in a single RoI is in general considered to be processing in a single tracking stage. This is because there is only a single pass through the full set of tracking algorithms over any given RoI. Where multiple passes of aspects of the tracking are intentionally performed over similar, but not identical, regions of the detector, and where the second pass is in a different RoI constructed to overlap with, extend, or update the RoI of the first pass, this is referred to as multistage tracking. In this case, each set of steps within a specific RoI constitutes a single tracking stage. Such a case might be where the first stage runs the fast tracking in a narrow RoI, and a subsequent stage again runs the fast tracking in a new RoI, along the same direction, but wider. This is illustrated for a generic multistage signature in Fig. 7.

For the hadronic tau trigger, it is useful to run the tracking in a larger RoI than that used, for instance, in the electron trigger, to allow for the opening angle of the tracks from three-prong tau decays [8, 13]. For Run 1, this was done by using a single RoI, wider in \(\eta \)–\(\phi \) but fully extended along the beam line, which consequently was one of the most time-consuming aspects of the tracking in Run 1. To limit the tracking CPU usage in the wider RoI, a two-stage processing approach was implemented for the tau trigger in Run 2. In the first stage, the position of the tau event vertex along the beam line is identified by executing the fast tracking in a narrow RoI with a full width of 0.2 in both \(\eta \) and \(\phi \), directed towards the tau candidate calorimeter cluster, but fully extended along the beam line in the range \(|z|<{225}\,\hbox {mm}\) to identify the leading \(p_{\text {T}}\) tau decay tracks. The second stage executes the fast tracking again, followed by the precision tracking for the tracks found in this second fast tracking stage, but this time in a wider RoI with a full width 0.8 in both \(\eta \) and \(\phi \), centred on the z position of the leading track identified by the first stage and limited to \(|\Delta z|<{10}\,\hbox {mm}\) relative to this leading track. The RoI from these different single-stage and two-stage strategies are illustrated schematically in Fig. 8.
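
The geometry of the two tau tracking stages can be sketched as follows. The `RoI` class, the helper names and the use of the \(\eta \)–\(\phi \)–z volume as a rough proxy for the amount of detector to be processed are all assumptions for illustration. Only the widths and z ranges are taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoI:
    """Hypothetical RoI description: centre and half-widths."""
    eta: float
    phi: float
    z: float         # centre along the beam line [mm]
    half_eta: float
    half_phi: float
    half_z: float    # [mm]


def tau_first_stage_roi(cluster_eta, cluster_phi):
    # Narrow in eta and phi (full width 0.2), fully extended in z (|z| < 225 mm).
    return RoI(cluster_eta, cluster_phi, 0.0, 0.1, 0.1, 225.0)


def tau_second_stage_roi(first_stage, leading_track_z):
    # Wide in eta and phi (full width 0.8), narrow in z (|dz| < 10 mm).
    return RoI(first_stage.eta, first_stage.phi, leading_track_z, 0.4, 0.4, 10.0)


def roi_volume(roi):
    """Crude size proxy: product of the full widths in eta, phi and z."""
    return (2 * roi.half_eta) * (2 * roi.half_phi) * (2 * roi.half_z)


first = tau_first_stage_roi(0.5, 1.0)
second = tau_second_stage_roi(first, leading_track_z=32.0)
print(roi_volume(first), roi_volume(second))
```

With this proxy the second-stage RoI is smaller than the first-stage RoI despite being four times wider in both \(\eta \) and \(\phi \), because its extent in z is reduced from 450 mm to 20 mm. The proxy ignores the actual hit density and should not be read as a timing prediction.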

For comparison purposes, during the commissioning for the Run 2 data taking, the tau lepton triggers were also executed in a single-stage mode, similar to that used for Run 1 [54], running the fast track finder only once in a wide RoI in \(\eta \) and \(\phi \) and fully extended along the beam line, followed by the precision tracking again in this same RoI. As a result of the time saved in the processing of the second stage because of the narrow z extent along the beam line, the actual \(\eta \)–\(\phi \) width of the second stage RoI can be made larger than would otherwise be possible running only in a single stage with the full z extent.

In Run 1, the b-jet trigger ran the full tracking individually in the full z-width RoI centred on jets from the trigger [9] with a wide \(\eta \)–\(\phi \) extent. However, for Run 2, the increased beam energy and interaction multiplicity meant that this approach was inappropriate, resulting in too many RoI with too large an overlap such that the degree of processing of overlapping parts of the detector became prohibitive.

Consequently, for the Run 2 b-jet trigger [10] a new multistage tracking strategy was adopted with a new initial tracking stage, running tracking specifically to identify the likely event vertex z position for use in the second stage. For the second stage, separate RoI about each jet axis are used as in Run 1, but with each RoI specified more tightly at the beam line about the z vertex position identified in the first stage. For the tracking in the initial vertex stage, all jets identified by the jet trigger with transverse energy \(E_{\text {T}} >{30}\,\text {GeV}\) are considered, and tracks are reconstructed with the fast tracking algorithm in a narrow region with full widths of 0.2 in \(\eta \) and \(\phi \) around the jet axis for each jet, but with \(|z|<{225}\hbox { mm}\) along the beam line (see footnote 3). In order to prevent the multiple processing of regions of the detector that overlap, before running the fast tracking, the RoI about each jet axis are first aggregated into a single super RoI for the event. This super RoI is then used by the data preparation of the first stage vertex tracking to determine which detector elements – in this case individual silicon modules from the pixel and SCT detectors – should be read out for the subsequent processing. This happens only once per event for the single super RoI, which can include non-overlapping regions. This is illustrated in Fig. 9, which shows the aggregation of the individual narrow jet RoI into a single, more complicated region used by the data preparation. With only the data from these detector elements corresponding to the RoI about the jet directions, the tracking then runs as if it were processing the entire detector.
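
The aggregation into a super RoI can be sketched as below. This is an illustration under simplifying assumptions, not the trigger code: the `Jet` class and the function names are hypothetical, each silicon module is reduced to a single \(\eta \)–\(\phi \) point, and the z extent is ignored since every constituent RoI spans the full beam line range. The 30 GeV threshold and the half-width of 0.1 follow from the values quoted in the text.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Jet:
    et: float    # transverse energy [GeV]
    eta: float
    phi: float


def delta_phi(a, b):
    """Signed azimuthal difference wrapped into [-pi, pi)."""
    return (a - b + math.pi) % (2.0 * math.pi) - math.pi


def build_super_roi(jets, et_min=30.0):
    """Collect the axes of all jets above threshold into one super RoI."""
    return [(jet.eta, jet.phi) for jet in jets if jet.et > et_min]


def in_super_roi(super_roi, eta, phi, half_width=0.1):
    """True if the point lies inside at least one constituent jet RoI."""
    return any(
        abs(eta - e) <= half_width and abs(delta_phi(phi, p)) <= half_width
        for e, p in super_roi
    )


def modules_to_read(super_roi, modules):
    """Each module is requested at most once, even where jet RoI overlap."""
    return [
        name for name, (eta, phi) in modules.items()
        if in_super_roi(super_roi, eta, phi)
    ]


jets = [Jet(50.0, 0.0, 0.0), Jet(40.0, 0.05, 0.05),
        Jet(20.0, 2.0, 1.0), Jet(35.0, -1.0, 3.1)]
modules = {"A": (0.02, 0.02), "B": (2.0, 1.0), "C": (-1.0, -3.1), "D": (0.5, 0.5)}
selected = modules_to_read(build_super_roi(jets), modules)
```

In this toy example module A lies inside two overlapping jet RoI but appears only once in the output, module B is skipped because its jet is below threshold, and module C is picked up across the \(\phi \) wrap-around.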

Following this stage, the tracks identified in the super RoI are used for the primary vertex reconstruction [55]. This vertex is used to define wider RoI about each jet axis, with \(|\Delta \eta |<0.4\) and \(|\Delta \phi |<0.4\) with respect to the jet axis, but with \(|\Delta z|<{10}\hbox { mm}\) relative to the primary vertex z position. These RoI are then used for the second-stage reconstruction which again runs the fast tracking but this time in the wider \(\eta \) and \(\phi \) regions about the jets. This is then followed by the precision tracking, secondary vertexing and b-tagging algorithms. For the vertex stage, different vertex algorithms are available. As in the case of the tau multistage tracking, the time saved by running the jet tracking in very narrow RoI in z about the vertex position allows the jet tracking stage to execute in a wider RoI than would be possible with a single-stage processing.

In Run 2 this same approach was also adopted for some of the standard jet triggers in order to be able to run the global sequential calibration [56]. This makes use of the information from the precision track reconstruction in the jets to improve the jet energy resolution measured with respect to the offline jets. This allows a tighter selection on the jet transverse momentum [57, 58], closer to that used offline, thus reducing the rate from the jet trigger.

4 Inner detector trigger timing

In this section, timing measurements for the tracking related algorithms used in the inner detector trigger in Run 2 are presented. Timing measurements for the different tracking related algorithms were taken online, using the trigger cost monitoring framework [27]. Unless otherwise stated, the timing measurements were recorded during a typical physics run, on Saturday, September 29th, 2018 with a mean pile-up interaction multiplicity of 52 at the start, and 19 at the end of the run. The full timing distributions are presented for the start of the run only.

The retrieval and data preparation for the silicon detectors prior to running the tracking algorithms themselves is typically reasonably fast, but is still a significant contribution to the overall processing time. The subsequent fast track reconstruction then precedes the execution of hypothesis algorithms which combine the data from the calorimeters or muon spectrometer with the ID tracks to further reduce the rate. Following this reduction, the precision tracking stage is executed, and the precision tracks can be extended into the TRT, which requires the TRT data preparation and track extension algorithms. Subsequent algorithms may use the tracks in combination with clusters reconstructed in the calorimeter, or perform a combined fit using the information from the muon spectrometer and the inner detector. A discussion of some of these other algorithms can be found elsewhere [59, 60].

A vertex finding algorithm can also be executed using the tracks from any stage in the processing, typically following the precision tracking, but also using the tracks from the fast track finder, for example, following the b-jet vertex tracking. In the case of the multistage tracking, the data preparation may be executed a second time in the wider regions of the detector. However, the times for the data preparation are not recorded separately for the different stages, and so for this analysis are aggregated into a single distribution for each specific trigger.

4.1 Algorithm execution time in the muon trigger

The principal processing times for the algorithms in the muon signature are shown in Fig. 10 for the standard full RoI width of 0.2 in both \(\eta \) and \(\phi \). Some triggers run a second isolation tracking stage in a wider \(\eta \)–\(\phi \) region, after the first round of precision tracking, to determine whether tracks are isolated with respect to other tracks from the interaction. In this case the data preparation and fast track finder are executed a second time in an RoI that is wider in \(\eta \) and \(\phi \), but still extended by the full length in z along the beam line. The precision tracking then runs a second time on the tracks in this larger RoI. As in the case of the data preparation, information to distinguish between the first and second execution of the precision tracking within any specific muon trigger is not available, so only the precision tracking time of the first pass is shown here.

The space-point preparation is generally very fast, as can be seen in Fig. 10a, each algorithm having a mean processing time per RoI of less than 10 ms.

Figure 10b illustrates that the fast tracking in the first stage processing is reasonably fast with a mean execution time of 40 ms and a maximum at approximately 300 ms. The precision tracking has a mean execution time of less than 7 ms.

The second stage muon isolation fast track processing with the fully extended z range, and wider \(\eta \)–\(\phi \) size is significantly slower, with a mean processing time of approximately 116 ms. This is only executed on RoI where a trigger muon candidate has already been confirmed such that the rate should be significantly lower than for the first stage RoI processing. The TRT extension is only performed for the precision tracks and the execution time from Fig. 10c is under 10 ms.

One additional factor in the processing of the muon trigger is that prior to the execution of the first stage processing with the fast tracking, the RoI is determined by the muon spectrometer trigger [6], which calculates an updated RoI position based on the first processing of the spectrometer data in the HLT. Partly because of the toroidal field in the muon spectrometer, the resolution of the tracks in z extrapolated back to the beam line for the spectrometer-only muon candidates is not good enough to refine the z position of the RoI, so the full extent in z along the beam line is required. Additionally, in the transition regions between the barrel and endcap detectors, with pseudorapidities near \(\pm 1\), the \(\eta \) resolution of the spectrometer-only candidates is poor, and so the RoI for the ID tracking can be made commensurately wider to ensure that the space-points for the ID track are contained within the RoI.

4.2 Algorithm execution time in the electron trigger

The processing for the electron triggers is slightly less complex than for the muons, since it does not run a second stage. As such both the fast and precision tracking run in the same RoI, produced after the first calorimeter reconstruction algorithm. The RoI dimensions are the same as for the first stage from the muon signature. Between the fast tracking and precision tracking, additional reconstruction algorithms combining the calorimeter and tracking information to produce electron candidates can be executed. Hypothesis algorithms are then executed to select on these electron candidates to reduce the rate before the precision tracking. The principal algorithm processing times for the different steps in the sequence can be seen in Fig. 11.

Here the data preparation stages are very quick – typically less than 7 ms. The mean processing time in data from late 2018 was approximately 26 ms for the fast tracking and under 16 ms for the precision tracking. The full TRT extension following the precision tracking took under 6 ms.

4.3 Algorithm execution time in the tau trigger

As an example of the benefits afforded by using the multistage approach for the tau processing discussed in Sect. 3.4, the time taken by the tau tracking in both the single-stage and two-stage variants is shown in Fig. 12. This is from an ATLAS data-taking run with 25 ns bunch spacing taken during the detector commissioning from 2015 where the mean pile-up interaction multiplicity was 29. For subsequent production luminosity running in 2016 and onwards, only the two-stage version was running for all tau triggers. Figure 12a shows the processing times per RoI for the fast tracking stages: individually for the first and second stages of the two-stage tracking, and for the single-stage tracking with the original wider RoI in \(\eta \), \(\phi \) and z. The fast tracking in the single-stage has a mean execution time of approximately 66 ms, with a very long tail. In contrast, the first stage tracking with an RoI that is wide only in the z direction has a mean execution time of 23 ms, driven predominantly by the narrower RoI width in \(\phi \). The second stage tracking, although wider in \(\eta \) and \(\phi \) even than the RoI used in the single-stage, takes only 21 ms on average because of the significant reduction in the RoI z-width along the beam line. Figure 12b shows a comparison of the processing time per RoI for the precision tracking. The precision tracking in the two-stage strategy executes faster, with a mean of 4.8 ms compared to 12 ms for the single-stage tracking.
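
As a rough cross-check of the numbers quoted above, the mean tracking times per RoI can be summed for each strategy. The sketch assumes that every RoI runs all steps, with no rejection between them, and that the approximate means can simply be added. Both are simplifications, and the variable names are illustrative only.

```python
# Mean execution times per RoI [ms], as quoted in the text for the 2015 run.
single_stage = {"fast": 66.0, "precision": 12.0}
two_stage = {"fast_stage1": 23.0, "fast_stage2": 21.0, "precision": 4.8}

total_single = sum(single_stage.values())
total_two = sum(two_stage.values())

# Fractional reduction in mean tracking time per RoI.
saving = 1.0 - total_two / total_single

print(f"single-stage: {total_single:.1f} ms, two-stage: {total_two:.1f} ms, "
      f"saving: {saving:.0%}")
```

Under these assumptions the two-stage strategy takes about 49 ms per RoI against 78 ms for the single-stage strategy, a reduction of roughly 37% in the mean, before any benefit from the shorter tail of the distribution is considered.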

The execution times for the tracking related algorithms for the tau signature from production luminosity running late in 2018 are shown in Fig. 13. In this case the pile-up interaction multiplicity, with a peak mean value of approximately 52 interactions per bunch crossing, is significantly larger than for the 2015 data-taking from the previous figure. In addition, at the start of running in 2017, the z-width of the second stage tau RoI was reduced further. Here the first stage fast track finder execution time in the full z-width RoI is 35 ms and that of the second stage is 23 ms. The precision tracking takes 10 ms.

A significant time – approximately 13 ms – is taken by the calculation of the TRT drift circles for the extension of the precision tracks into the TRT.

4.4 Algorithm execution time in the \(b\text {-jet}\) trigger and vertexing

The \(b\text {-jet}\) trigger runs as a multistage trigger with regard to the tracking. Before the b-jet specific parts of the trigger are executed, the trigger runs as a standard jet trigger, running the jet finding in the full detector [9].

Following this, the first stage of the b-jet trigger takes the collection of jets from the full detector, and creates a separate RoI about each jet with \(E_{\text {T}} >{30}\,\text {GeV}\). These are aggregated into a super RoI as discussed in Sect. 3.4. The data preparation and fast tracking for the vertexing runs in this super RoI, followed by a vertex algorithm. The vertex position is then used to refine the z position of each jet RoI, which are also widened in \(\eta \) and \(\phi \).

Subsequently, the data preparation, fast tracking and precision tracking are executed in each jet RoI individually. The precision tracks are then used for the subsequent b-tagging algorithms. For the standard jet triggers with the global sequential calibration, the tracks are used for the additional correction of the jet \(E_{\text {T}}\) before the b-tagging is performed.

The distribution of processing time for the ID data preparation can be seen in Fig. 14a, which includes the data preparation for both the vertex tracking stage and the second, RoI based, \(b\text {-jet}\) tracking stage. The mean execution time for each algorithm is under 10 ms.

Due to the larger volume of the detector to be processed, the fast tracking in the vertex tracking stage is the longest-running of the tracking stages, with a mean execution time of 46 ms, shown in Fig. 14b. However, caching of the objects reconstructed by the trigger means that the vertex tracking need be executed only once per event, with the output being shared between all jet triggers. The subsequent tracking within the individual jet RoI has a mean execution time of 29 ms and 12 ms per jet RoI for the fast and precision tracking algorithms respectively. The TRT extension for the precision tracks takes a total of approximately 10 ms per jet RoI, as illustrated in Fig. 14c.

Compared to the tracking itself, the execution time for the vertexing is rather fast. Shown in Fig. 15c, the execution time for the offline based vertex algorithm is a little over 1 ms, whereas the histogram based vertex algorithm takes less than 0.5 ms. Also shown in Fig. 15 are the times for the execution of the vertex algorithm in the muon, electron and tau signatures. In each case of the single-RoI leptonic triggers the offline based algorithm takes less than 1 ms.

4.5 Total tracking time per event

The total time spent by the tracking related algorithms in the event processing from this same typical run from September 2018 can be seen in Fig. 16.

This figure shows the average of the total execution time per event for each algorithm of the ID trigger, and how this varies as the mean pile-up interaction multiplicity changes throughout the run. The total time for each algorithm is dependent on the details of the trigger menu, which determines how often and for which trigger thresholds the tracking will be executed.

The execution times for the data preparation in the silicon detectors are clearly seen to decrease for falling pile-up multiplicities. As expected from the execution time for each algorithm for the different signatures, the data preparation for the pixel detector is the slowest, taking 27 ms overall in the trigger per event for the high interaction multiplicities, with \(\langle \mu \rangle = 52\), at the start of the fill.

The fast tracking is clearly the longest with a mean of approximately 155 ms per event in total at high interaction multiplicities, and has a strong non-linear behaviour with increasing multiplicities. The precision tracking in contrast has a more linear dependence, and takes only a third as long as the fast tracking in total. This is due to the time per call being lower, and the precision tracking being executed less often due to the early rejection in the trigger, which terminates processing before running the precision tracking algorithm.

The TRT data preparation and track extension are both reasonably fast and exhibit a more linear behaviour, since they are only executed on tracks from the precision tracking.

Finally, the vertex algorithms are seen to be very fast overall. The histogramming algorithm is in principle only executed once per event and, as in the previous section, is seen to have a mean time per call at high pile-up of around 0.3 ms, exhibiting only a slight dependence on the pile-up interaction multiplicity. In contrast, the offline vertex algorithm also runs once per event for the \(b\text {-jet}\) vertexing, but in addition is executed once per RoI for the other signatures. The execution time shows a small dependence on the interaction multiplicity, and consists mostly of the time taken by the single execution in the \(b\text {-jet}\) vertex tracking.

Overall, the total time spent in all the tracking related algorithms discussed here is approximately 290 ms per event at high pile-up multiplicities, decreasing to a little more than 90 ms for the lower multiplicities.

5 High-level trigger tracking performance

5.1 Data selection

For the performance analyses of the muon and electron signatures, the full available integrated luminosity for the 2016–2018 running period is used. This is possible since the processing for these signatures did not change significantly during the data taking period.

For the case of the tau and \(b\text {-jet}\) analyses, which ran two-stage tracking with a modified second stage RoI, significant changes were made to the reconstruction of either the first, or the second stages over the three years. These included modifications to the second stage seed finding, and so only the results for 2018 are presented in full detail. Brief comparisons of the efficiencies for each of the three years, 2016, 2017, and 2018, are also presented. For the tau signature, the full available integrated luminosity from each year is used. For the \(b\text {-jet}\) analyses, only a subsample from each year, sufficient to provide a small statistical uncertainty, is used.

In all cases, only events where the data quality was determined to be good for physics analyses are used [61].

The tracking efficiency for the trigger with respect to the offline tracking is determined using a number of support triggers. These triggers are essentially identical to the physics triggers, and operate by reconstructing the tracks in the trigger as normal, but then selecting on the objects reconstructed in the muon spectrometer or calorimeter only, with no selection on the tracking information from the inner detector. In this way, it is possible to estimate the efficiency of the tracking, unbiased by the ID track reconstruction itself. One caveat is that because the trigger does not select on the inner detector track information, the prescale for the triggers generally needs to be kept high to restrict the rate. Since the trigger objects used for the selection do not include any tracking, the fraction of background selected by the trigger will of necessity be significantly higher than the fully selecting triggers, e.g. electron candidates from these triggers will have been selected without any requirement on track multiplicity, instead being based purely on the clusters reconstructed in the calorimeter. As a consequence there may be a large contribution from narrow QCD jets which resemble an electromagnetic cluster. For each of the analyses, any such background is reduced by the selection of a reference sample using the full offline reconstructed objects, against which the performance is measured. Consequently, the sample of good offline objects, including the offline tracks, that are used to monitor the trigger may be statistically limited in regions of phase space.

5.2 Track selection

For the measurement of the efficiency and resolution, the ensemble of inner detector trigger tracks within the RoI are first matched to the selected offline reference objects using a modified solution to the stable marriage problem [62] – the closest matches taken only from those tracks which match within a loose preselection cone of size \(\Delta R = \sqrt{(\Delta \eta )^2+(\Delta \phi )^2} = 0.05\) around an offline reconstructed track. For any given offline track, the closest matching trigger track is chosen as a match if, and only if, it does not match more closely any other offline track.

Efficiencies are then measured by taking the correlated ratio of the number of offline reference objects that have such a matched trigger track to the total number of offline objects passing the selection.
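
The matching criterion and the efficiency definition above can be sketched as follows. This is a single-pass illustration of the criterion as quoted, not the modified stable marriage solution used in the analysis, which may treat rejected candidates differently. Tracks are reduced to (\(\eta \), \(\phi \)) pairs and all function names are hypothetical.

```python
import math


def delta_r(a, b):
    """Distance in eta-phi between two (eta, phi) pairs, with phi wrapped."""
    deta = a[0] - b[0]
    dphi = (a[1] - b[1] + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(deta, dphi)


def match_tracks(offline, trigger, cone=0.05):
    """Return {offline index: trigger index} for uniquely matched tracks."""
    matches = {}
    for i, off in enumerate(offline):
        # Candidate trigger tracks inside the loose preselection cone.
        candidates = [
            (delta_r(off, trg), j)
            for j, trg in enumerate(trigger)
            if delta_r(off, trg) < cone
        ]
        if not candidates:
            continue
        dr, j = min(candidates)
        # Accept only if this trigger track is not closer to another offline track.
        if all(delta_r(other, trigger[j]) >= dr
               for k, other in enumerate(offline) if k != i):
            matches[i] = j
    return matches


def efficiency(offline, trigger, cone=0.05):
    """Fraction of offline reference tracks with a matched trigger track."""
    return len(match_tracks(offline, trigger, cone)) / len(offline)


offline_tracks = [(0.0, 0.0), (0.03, 0.0), (1.0, 1.0)]
trigger_tracks = [(0.02, 0.0), (1.2, 1.0)]
matched = match_tracks(offline_tracks, trigger_tracks)
```

In this example the first trigger track lies inside the cone of both the first and second offline tracks, but is assigned only to the second, to which it is closer. The second trigger track lies outside the cone of every offline track, so one of the three offline tracks is matched.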

Since the residual distributions themselves may have very long non-Gaussian tails, the resolution is estimated by taking the root-mean-square of the central 95% of the residual distribution. This is then scaled by the inverse of the root-mean-square of the central 95% of a Gaussian distribution with unit standard deviation, such that for any Gaussian residual distribution the true width would be obtained.
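
A minimal sketch of this resolution estimate is given below. It assumes that the central 95% is obtained by discarding 2.5% of the entries from each end of the sorted residuals, and that the root-mean-square is taken about the mean of the retained entries. Both are assumptions, as the text does not specify them. The scale factor uses the standard result for a symmetrically truncated unit Gaussian, \(\sigma _{95}^2 = 1 - 2a\,\varphi (a)/0.95\), where \(a\) is the 97.5% quantile and \(\varphi \) the unit Gaussian density.

```python
import math
from statistics import NormalDist


def gaussian_core_rms(fraction=0.95):
    """RMS of the central `fraction` of a Gaussian with unit standard deviation."""
    unit = NormalDist()
    a = unit.inv_cdf(0.5 + fraction / 2.0)   # truncation point, about 1.96
    return math.sqrt(1.0 - 2.0 * a * unit.pdf(a) / fraction)


def resolution_rms95(residuals, fraction=0.95):
    """Scaled RMS of the central `fraction` of the residuals."""
    ordered = sorted(residuals)
    n_drop = int(round(len(ordered) * (1.0 - fraction) / 2.0))
    core = ordered[n_drop:len(ordered) - n_drop]
    mean = sum(core) / len(core)
    rms = math.sqrt(sum((r - mean) ** 2 for r in core) / len(core))
    # Rescale so that a purely Gaussian distribution returns its true width.
    return rms / gaussian_core_rms(fraction)


# Deterministic Gaussian-like sample with width 2, built from evenly spaced quantiles.
n = 2000
sample = [NormalDist(0.0, 2.0).inv_cdf((i + 0.5) / n) for i in range(n)]
width = resolution_rms95(sample)
```

The scale factor is approximately \(1/0.871 \approx 1.15\), so for the Gaussian test sample above the estimate recovers a width close to 2, while for real residuals the long tails are excluded from the estimate.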

Reference objects for the analyses are chosen to satisfy the full offline selection criteria for such objects, similar to those used for physics analyses, but with additional selection. These are discussed in detail later in the text.

In all cases, offline candidate tracks are required to lie within the RoI used for the trigger reconstruction, since tracks outside the RoI would not be reconstructable. An additional requirement ensures that offline tracks are used only if the transverse impact parameter for the point of closest approach to the beam line, and the z position relative to any relevant offline vertex, are both less than 10 mm.

For the remaining discussion no distinction is made between positively and negatively charged particles for the transverse momentum and only the absolute value is considered.

5.3 Tracking in the muon signature

For the muon signature the HLT uses triggers [4, 25] with muon transverse momentum reconstruction down to 4 \(\text {GeV}\). The performance of the trigger tracking is therefore studied using a range of support triggers covering the region down to this threshold.

The ID tracking efficiency for muons selected by the 4 \(\text {GeV}\) and 20 \(\text {GeV}\) muon support triggers, with respect to medium offline muon candidates with \(p_{\text {T}} >{4}\,\text {GeV}\) or \(p_{\text {T}} >{20}\,\text {GeV}\). The efficiency is shown as a function of: (a) the offline reconstructed muon \(\eta \), (b) the offline reconstructed muon \(p_{\text {T}} \), (c) the offline reconstructed muon \(\phi \), (d) the offline reconstructed muon \(z_{0}\), (e) the offline reconstructed muon \(d_{0}\), and (f) the mean number of pile-up interactions, \(\langle \mu \rangle \). Efficiencies are shown for both the fast tracking and precision tracking algorithms. Statistical Bayesian uncertainties are shown, using a 68.3% confidence interval and uniform beta function prior.

Figure 17 shows the tracking efficiency of the ID trigger for medium quality offline muon candidates [63]. The efficiency integrated over \(p_{\text {T}}\) is shown for the fast tracking and the precision tracking for two representative thresholds for the offline muon selection: \(p_{\text {T}} >{4}\,\text {GeV}\) corresponding to the lowest trigger threshold, and \(p_{\text {T}} >{20}\,\text {GeV}\). The efficiency is shown as a function of the offline muon candidate track parameters and the mean number of pile-up interactions per event. The selection of the offline muon candidates is slightly biased by the muon spectrometer trigger selection near the threshold, but this does not affect the measurements of the inner detector trigger performance. The efficiency is shown for both the fast and precision tracking and is significantly better than 99%. The efficiency is observed to be constant as a function of the mean pile-up interaction multiplicity and other variables, with perhaps a slight decrease in efficiency for tracks with a large impact parameter.

Shown in Fig. 18 are the resolutions for the trigger tracks in \(\eta \), \(z_{0}\), and \(d_{0}\) with respect to the offline muon candidate pseudorapidity on the left, and with respect to the offline muon candidate \(p_{\text {T}}\) on the right. The resolution with respect to \(\eta \) is shown for the precision tracking and the fast tracking for the same two representative thresholds, \(p_{\text {T}} >{4}\,\text {GeV}\) and 20 \(\text {GeV}\), used in the efficiency determination. The resolution is seen to deteriorate somewhat for larger pseudorapidities, largely due to the larger amount of material through which the muons must pass as they leave the beam pipe and traverse the inner detector. The endcap silicon detectors are arranged perpendicular to the beam to partially ameliorate this, the effect of which can be seen for absolute pseudorapidities larger than 1.2 which is approximately the boundary between the barrel and endcap silicon detectors.

The track resolution for pseudorapidity (\(\eta \)), track z-vertex position, and transverse impact parameter with respect to the beam line, \(d_{0}\), as a function of offline track pseudorapidity and transverse momentum. The resolutions are shown for muons selected by the 4 \(\text {GeV}\) and 20 \(\text {GeV}\) muon support triggers for both the fast tracking and precision tracking algorithms.

The mean multiplicities for (a) pixel, (b) SCT, and (c) TRT hits on trigger tracks matched to offline tracks as a function of the matching offline track pseudorapidity. Shown are the hit multiplicities for the precision and fast tracking for the pixel and SCT. The TRT extension does not run for the FTF tracks and so the multiplicity for the FTF is not shown in (c).

These effects on the resolutions can be further understood by examination of the mean number of hits on the tracks. The mean multiplicity of pixel, SCT and TRT hits on trigger muon candidate tracks which are matched to offline muon candidates can be seen in Fig. 19 as a function of the pseudorapidity of the offline muon candidate. For the TRT multiplicity, only the precision tracks are shown since the TRT extension is not performed for the FTF tracks.

For central pseudorapidities there are approximately four pixel hits per track originating from the four pixel barrel layers. For \(|\eta |>1.9\), which delimits approximately the end of the acceptance for the outer pixel barrel layer, the multiplicity increases as the tracks transition into the endcap detectors. It should be noted that the acceptance of the IBL and the innermost of the three original pixel barrel layers extends beyond \(|\eta |=2.5\). This is the reason that the mean pixel cluster multiplicity for tracks at large absolute pseudorapidity is greater than four.

Because of the much larger radius of the outer SCT layer, the transition between barrel and endcap for tracks in the SCT begins much earlier in pseudorapidity, around \(|\eta |=1.2\). The four double layers of the barrel are responsible for the mean of eight SCT clusters in the barrel region, and the nine additional wheels in each endcap allow the tracks to maintain a mean of eight or more clusters out to the maximum acceptance at \(|\eta |=2.5\). It is largely the transition from SCT barrel to endcap which is responsible for the structure in the resolutions as the tracks transition from pure barrel hits, to a combination of barrel and endcap hits, and finally, to purely endcap hits for \(|\eta |>1.6\). The small asymmetry between positive and negative pseudorapidities is due to small asymmetries in the detector conditions regarding the detector response and non-operational modules.

The structure of the TRT is somewhat different – there are two barrel sections with only axial straws providing only r–\(\phi \) hit information – and two TRT endcaps which provide only z–\(\phi \) information. The z position for the end of the TRT barrel is approximately the same as for the SCT barrel, and the endcaps extend to approximately the same maximum z position as the SCT endcaps, such that tracks with \(|\eta |>2.1\) have few or no TRT hits. The drop in hit multiplicity for \(|\eta |\) around 0.8 is predominantly due to the transition from the barrel to the endcap TRT at ±800 mm in z. A small number of tracks at all pseudorapidities also have no TRT hits. This is more likely for tracks with low transverse momentum, such that the contribution of tracks with no TRT hits reduces the apparent mean hit multiplicity over all tracks and is responsible for the lower mean TRT multiplicity for the lower \(p_{\text {T}}\) muon trigger.
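
As a minimal worked illustration of this dilution effect, suppose a fraction \(f_0\) of the tracks has no TRT hits and the remaining tracks have a mean of \(\langle N\rangle _{>0}\) TRT hits. The symbols \(f_0\) and \(\langle N\rangle _{>0}\), and the numbers used below, are introduced here purely for illustration and are not measured values. The mean over all tracks is then

\[ \langle N_{\text {TRT}}\rangle = (1-f_0)\,\langle N\rangle _{>0} + f_0\cdot 0 = (1-f_0)\,\langle N\rangle _{>0}. \]

For example, \(f_0 = 0.05\) and \(\langle N\rangle _{>0} = 30\) would give \(\langle N_{\text {TRT}}\rangle = 28.5\), so a larger zero-hit fraction at low \(p_{\text {T}}\) lowers the apparent mean even if the multiplicity for tracks that do have TRT hits is unchanged.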

In general, the track hit multiplicities are broadly similar for all signatures for full-length tracks. There is a notable exception, however, for electron candidates where the hit multiplicities can be affected by bremsstrahlung. This is discussed in Sect. 5.4.

The offline dimuon invariant mass from events passing the analysis selection from the dimuon performance trigger. For the performance triggers, the RoIs used by the fast and precision tracking are the same and as such the offline dilepton candidates chosen for the analysis of the fast and precision tracking are identical

In addition to the standard performance triggers, which select on tracks reconstructed using the muon spectrometer only, there are additional dimuon performance triggers. These select events with two muon candidates consistent with both muons arising from the decay of a Z boson, by selecting candidates with a dimuon mass in the range \(70< m_{ll} < 110\) G\(\text {eV}\), a window around 91 G\(\text {eV}\). For these triggers, the two muons must each be in a different RoI. One of the RoIs, the tag RoI, selects on the full muon candidate including the full selection on the combined spectrometer and inner detector muon candidate, and the other, the probe RoI, selects solely on the muon candidate reconstructed in the spectrometer, without using any inner detector information. Selecting on the combined muon candidate in the tag RoI increases the purity of the selected events. The spectrometer-only selection in the probe RoI allows the efficiency to be measured for the probe muon candidate, unbiased by the track reconstruction in the inner detector itself. Requiring the first, reasonably pure, fully selected tag muon reduces the rate of such events, and requiring the two muon candidates to form a Z boson candidate greatly enhances the purity of the spectrometer-only probe muon candidates, particularly at high transverse momenta.
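
The kinematic part of this tag-and-probe selection can be sketched as follows. This is only an illustrative sketch, not the trigger implementation: the `Muon` class, the `roi_id` field and the function names are hypothetical, the distinction between combined (tag) and spectrometer-only (probe) reconstruction is not modelled, and the 13 G\(\text {eV}\) threshold is the one quoted for these triggers in the text that follows.

```python
import math
from dataclasses import dataclass

MUON_MASS_GEV = 0.1056583745  # muon mass in GeV


@dataclass
class Muon:
    """Hypothetical container for a muon candidate."""
    pt: float    # transverse momentum in GeV
    eta: float   # pseudorapidity
    phi: float   # azimuthal angle in radians
    roi_id: int  # hypothetical identifier of the RoI containing the candidate


def four_momentum(mu):
    """Return (E, px, py, pz) in GeV from (pt, eta, phi)."""
    px = mu.pt * math.cos(mu.phi)
    py = mu.pt * math.sin(mu.phi)
    pz = mu.pt * math.sinh(mu.eta)
    energy = math.sqrt(px * px + py * py + pz * pz + MUON_MASS_GEV ** 2)
    return energy, px, py, pz


def dimuon_mass(a, b):
    """Invariant mass of the two-muon system in GeV."""
    e, px, py, pz = (x + y for x, y in zip(four_momentum(a), four_momentum(b)))
    m2 = e * e - px * px - py * py - pz * pz
    return math.sqrt(max(m2, 0.0))  # guard against tiny negative rounding


def passes_tag_and_probe(tag, probe, pt_min=13.0, m_lo=70.0, m_hi=110.0):
    """Kinematic requirements only: different RoIs, pT thresholds, Z window."""
    if tag.roi_id == probe.roi_id:
        return False
    if tag.pt <= pt_min or probe.pt <= pt_min:
        return False
    return m_lo < dimuon_mass(tag, probe) < m_hi
```

Two back-to-back central muons with \(p_{\text {T}} = 45.6\) G\(\text {eV}\) each give a mass close to 91.2 G\(\text {eV}\) and so would pass the mass window in this sketch.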

For the fully selecting tag leg, the muon trigger selects muon candidates with \(p_{\text {T}} >13\) G\(\text {eV}\). The probe leg, selecting on the muon candidates reconstructed in the spectrometer only, also selects candidates with transverse momenta greater than 13 G\(\text {eV}\). Unbiased measurements are therefore possible from these triggers for muon transverse momenta greater than 13 G\(\text {eV}\). The data used for this analysis correspond to the full 2018 integrated luminosity.

Figure 20 shows the dilepton invariant mass distribution for offline candidates in events selected by the dimuon performance trigger and passing the medium offline muon selection, with no additional background rejection and with no corrections for trigger efficiency or detector response.

The muon track finding efficiency versus a the offline muon track pseudorapidity, b the offline muon track transverse momentum, c the offline muon track azimuthal angle, d the offline muon track \(z_0\) position along the beam line, e the offline muon track transverse impact parameter, \(d_0\), measured with respect to the beam line, and f the mean pile-up interaction multiplicity, all measured using the dimuon performance trigger. Statistical Bayesian uncertainties are shown, using a 68.3% confidence interval and uniform beta function prior

The efficiency as a function of the mean pile-up interaction multiplicity, \(\langle \mu \rangle \), and the offline track variables can be seen in Fig. 21. With this analysis, the statistical uncertainties are very much reduced with respect to the analyses presented earlier in this section. The efficiency for both the fast and precision tracking can be seen to approach 100% with small statistical uncertainties even at large transverse momenta, approaching 1 T\(\text {eV}\). The efficiency versus the transverse impact parameter clearly shows a very small decrease of slightly less than 0.5% for the fast tracking for \(d_0\) values of 1.5 mm, and slightly larger than 0.5% for the precision tracking. This is consistent with the suggestion from Fig. 17. The efficiency for both the precision and fast tracking is again seen to be approximately constant with respect to changes in the \(z_0\) position, but with a very small loss of efficiency for the precision tracking at high pile-up interaction multiplicity consistent with Fig. 17.
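
The statistical uncertainties quoted for these efficiencies are Bayesian intervals with a uniform prior. A minimal sketch of such a calculation is given below, assuming a binomial likelihood so that the posterior for the efficiency is a Beta(k+1, n−k+1) distribution for k passing candidates out of n. The choice of a central (equal-tailed) interval and the simple numerical integration on a grid are assumptions made here for illustration; the text does not specify how the interval is constructed, and the function name is hypothetical.

```python
import math


def efficiency_interval(passed, total, level=0.683, steps=20000):
    """Central credible interval for an efficiency with a uniform prior.

    The posterior density is proportional to eps**passed * (1-eps)**(total-passed).
    It is integrated numerically on a midpoint grid, which is adequate for a
    sketch but too coarse for very large samples.
    """
    if not 0 <= passed <= total:
        raise ValueError("need 0 <= passed <= total")
    failed = total - passed
    # Midpoints avoid evaluating log(0) at eps = 0 or eps = 1.
    xs = [(i + 0.5) / steps for i in range(steps)]
    logs = [passed * math.log(x) + failed * math.log1p(-x) for x in xs]
    peak = max(logs)
    weights = [math.exp(value - peak) for value in logs]
    norm = sum(weights)

    lo_target = 0.5 * (1.0 - level)
    hi_target = 1.0 - lo_target
    cumulative = 0.0
    lo, hi = 0.0, 1.0
    lo_found = False
    for x, w in zip(xs, weights):
        cumulative += w / norm
        if not lo_found and cumulative >= lo_target:
            lo, lo_found = x, True
        if cumulative >= hi_target:
            hi = x
            break
    return lo, hi
```

With this construction the interval remains well defined when every candidate passes, which is the relevant regime for efficiencies approaching 100%.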

The resolution in the pseudorapidity and inverse transverse momentum as a function of offline track pseudorapidity and transverse momentum. The resolutions are shown for muons selected with the \(p_{\text {T}} >{15}\,{\text {GeV}}\) muon requirement from the dimuon support triggers, for both the fast tracking and precision tracking algorithms

The resolution in the track pseudorapidity, track z position along the beam line, and the transverse impact parameter for these data are shown in Fig. 22 as functions of the offline track pseudorapidity and transverse momentum. The performance of the resolution at large transverse momentum, with the exception of \(d_0\), is seen to be generally consistent with that seen in Fig. 18 but again with significantly smaller uncertainties, most notably at large track transverse momenta. With regard to the resolution in \(d_0\), the dilepton analysis is performed using only the 2018 data where the mean pile-up interaction multiplicity is larger than for the combined 2016–2018 data. Since the transverse impact parameter resolution has some dependence on the pile-up interaction multiplicity, the resolution seen in Fig. 22 is somewhat worse than that shown in Fig. 18 for the combined 2016–2018 data.

The ID trigger tracking efficiency for electrons selected by the 5 \(\text {GeV}\) and 26 \(\text {GeV}\) electron triggers, with respect to offline electron candidates with \(E_{\text {T}} >{5}\,{\text {GeV}} \) for the 5 GeV trigger and \(E_{\text {T}} >{26}\,{\text {GeV}}\) for the 26 GeV trigger. The efficiency is shown as a function of a the offline reconstructed electron track \(\eta \), b the offline reconstructed electron track \(\phi \), c the offline reconstructed electron \(z_0\), d the mean number of pile-up vertices, \(\langle \mu \rangle \). Efficiencies are shown for both the fast tracking and precision tracking algorithms. Statistical Bayesian uncertainties are shown, using a 68.3% confidence interval and uniform beta function prior

5.4 Tracking in the electron signature

Because of the significant background from QCD jet events, a tight offline likelihood identification [11] is used for the reference electron selection for the electron signature analysis. The offline electron candidate track is required to have at least two pixel hits, an IBL hit if passing through at least one active IBL module, and at least four clusters in the SCT. These requirements were chosen to ensure better reconstruction in the pixel detector for offline tracks by eliminating extremely poorly reconstructed bremsstrahlung candidates where the hits in the pixel detector were missed. Analyses for several triggers are presented, each employing a kinematic selection of \(|\eta |<2.5\) and \(p_{\text {T}} > {5}\,{\text {GeV}}\) for the offline electron candidate tracks. In addition, there is a selection on the electron candidate transverse energy, \(E_{\text {T}}\). For the candidates from the 5 G\(\text {eV}\) trigger a selection of \(E_{\text {T}} >5\) G\(\text {eV}\) is applied. For the 10 G\(\text {eV}\) trigger the selection is \(E_{\text {T}} >10\) G\(\text {eV}\), and \(E_{\text {T}} >26\) G\(\text {eV}\) for the 26 G\(\text {eV}\) trigger. For the analyses with the harder \(E_{\text {T}}\) selection, the same 5 G\(\text {eV}\) track \(p_{\text {T}}\) selection is used. Consequently, the fraction of the electron candidate energy that can be radiated as bremsstrahlung for candidates near the trigger threshold for the higher \(E_{\text {T}}\) threshold triggers is larger than for the lower \(E_{\text {T}}\) threshold triggers. As an additional requirement, to remove offline candidates where the track \(p_{\text {T}}\) has been badly overestimated, offline candidates with \(E_{\text {T}}/p_{\text {T}} <0.8\) are removed, where \(E_{\text {T}}\) is measured in the calorimeter, and \(p_{\text {T}}\) is from the offline track.
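
The reference selection described above can be summarised in a short sketch. The class and field names are hypothetical and are not taken from the ATLAS software; the tight likelihood identification is represented only by a boolean flag assumed to be computed elsewhere.

```python
from dataclasses import dataclass


@dataclass
class OfflineElectron:
    """Hypothetical container for an offline electron candidate."""
    et: float                 # calorimeter transverse energy in GeV
    track_pt: float           # offline track transverse momentum in GeV
    track_eta: float          # offline track pseudorapidity
    n_pixel_hits: int
    n_sct_clusters: int
    crosses_active_ibl: bool  # track passes through an active IBL module
    has_ibl_hit: bool
    is_tight: bool            # tight likelihood identification, assumed given


def passes_reference_selection(el, et_threshold):
    """Apply the offline requirements for a trigger with the given E_T threshold."""
    if not el.is_tight:
        return False
    if abs(el.track_eta) >= 2.5 or el.track_pt <= 5.0:
        return False
    if el.et <= et_threshold:
        return False
    if el.n_pixel_hits < 2 or el.n_sct_clusters < 4:
        return False
    # An IBL hit is required only if an active IBL module is crossed.
    if el.crosses_active_ibl and not el.has_ibl_hit:
        return False
    # Remove candidates whose track pT is badly overestimated.
    if el.et / el.track_pt < 0.8:
        return False
    return True
```

In this sketch a candidate with \(E_{\text {T}} = 30\) G\(\text {eV}\) and a track \(p_{\text {T}}\) of 6 G\(\text {eV}\) passes the selection for the 26 G\(\text {eV}\) trigger, which illustrates how much of the energy can have been radiated for the higher threshold triggers while the track still satisfies the 5 G\(\text {eV}\) requirement.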

The resolution for pseudorapidity and inverse transverse momentum as a function of offline track pseudorapidity and transverse momentum. The resolutions are shown for electrons selected by the 5 \(\text {GeV}\) and 26 \(\text {GeV}\) electron support triggers for both the fast tracking and precision tracking algorithms

The efficiency with respect to a the offline electron transverse energy, \(E_{\text {T}}\), b the offline electron \(E_{\text {T}}/p_{\text {T}} \), and c the offline electron track \(p_{\text {T}}\). Shown in d is the distribution of \(E_{\text {T}}/p_{\text {T}} \) for the offline electron candidates. Results are shown for electrons selected by the 5 \(\text {GeV}\), 10 \(\text {GeV}\) and 26 \(\text {GeV}\) electron support triggers for panel a, and by the 5 \(\text {GeV}\), 26 \(\text {GeV}\) support triggers for panels b and c. For the efficiencies versus offline electron candidate \(E_{\text {T}}\) and \(p_{\text {T}}\), only candidates where \(E_{\text {T}}/p_{\text {T}} >0.8\) are used. Statistical Bayesian uncertainties are shown, using a 68.3% confidence interval and uniform beta function prior

Figure 23 shows the ID tracking efficiency for the 5 \(\text {GeV}\) and 26 \(\text {GeV}\) electron triggers as a function of the \(\eta \), \(\phi \), and \(z_{0}\) values of the offline electron candidate track, and as a function of the mean pile-up multiplicity. The tracking efficiency is measured with respect to offline electron tracks with \(p_{\text {T}} >{5}\,{\text {GeV}}\) for trigger electron candidates with either \(E_{\text {T}} >5\) G\(\text {eV}\) or \(E_{\text {T}} >26\) G\(\text {eV}\), from the 5 \(\text {GeV}\) and 26 \(\text {GeV}\) electron support triggers. The offline electron tracks are those from tight offline electron candidates. The efficiencies of the fast track finder and precision tracking exceed 99% for all pseudorapidities.

Figure 24 shows the resolutions of the track pseudorapidity and \(1/p_{\text {T}} \) as a function of either the \(\eta \) or \(p_{\text {T}} \) of the offline track from the offline electron candidates. For this analysis, the same selection on the electron candidate \(E_{\text {T}}\) and \(p_{\text {T}}\) discussed previously is applied. Consequently, for events with a 5 G\(\text {eV}\) selection, there is no phase space for the electron candidates near the threshold to radiate any bremsstrahlung photons. For the 26 G\(\text {eV}\) electrons however, there is significant phase space available for radiation. As such, for the 26 G\(\text {eV}\) threshold trigger, tracks which have \(p_{\text {T}}\) far below 26 G\(\text {eV}\) will correspond to electrons that have undergone significant radiation. The resolutions for these tracks will be significantly worse than for the tracks with the same \(p_{\text {T}}\) from the 5 G\(\text {eV}\) trigger, but that have not undergone such emission. For the 5 G\(\text {eV}\) threshold, the resolution of the inverse \(p_{\text {T}}\) for the fast tracking is slightly worse than for the precision tracking. As expected it is seen to deteriorate at larger \(\eta \) for both the higher and lower threshold triggers. Despite the significantly worse resolution at low \(p_{\text {T}}\) for the tracks from the 26 G\(\text {eV}\) trigger, the resolution, when integrated over \(p_{\text {T}}\), is still significantly better than for the lower \(p_{\text {T}}\) threshold since only a relatively small fraction of events from the 26 G\(\text {eV}\) trigger have low \(p_{\text {T}}\) tracks. Therefore, the usual improvement of the resolution with increasing \(p_{\text {T}}\) is enough to provide the observed significantly better overall resolution.

The resolution for the precision tracking at low \(p_{\text {T}} \) and large pseudorapidities from the 26 G\(\text {eV}\) trigger is worse than for the fast tracking as a consequence of the different SCT hit multiplicities observed on each type of trigger track. This is discussed in more detail later in this section.